Configuration Guide


Config

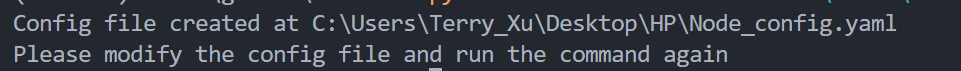

python -m NodeRAG.build -f path/to/main_folder

The first time you run this command, it creates a Node_config.yaml file in the main_folder directory.

Modify the config file according to the following instructions (add your API key and service provider) so that NodeRAG can access the correct API.

#==============================================================================
# AI Model Configuration
#==============================================================================
model_config:
  service_provider: openai # AI service provider (e.g., openai, azure)
  model_name: gpt-4o-mini # Model name for text generation
  api_keys: # Your API key (optional)
  temperature: 0 # Temperature parameter for text generation
  max_tokens: 10000 # Maximum tokens to generate
  rate_limit: 40 # API rate limit (requests per second)

embedding_config:
  service_provider: openai_embedding # Embedding service provider
  embedding_model_name: text-embedding-3-small # Model name for text embeddings
  api_keys: # Your API key (optional)
  rate_limit: 20 # Rate limit for embedding requests

#==============================================================================
# Document Processing Configuration
#==============================================================================
config:
  # Basic Settings
  main_folder: C:\Users\Terry_Xu\Desktop\HP # Root folder for document processing
  language: English # Document processing language
  docu_type: mixed # Document type (mixed, pdf, txt, etc.)

  # Chunking Settings
  chunk_size: 1048 # Size of text chunks for processing
  embedding_batch_size: 50 # Batch size for embedding processing

  # UI Settings
  use_tqdm: false # Enable/disable progress bars
  use_rich: true # Enable/disable rich text formatting

  # HNSW Index Settings
  space: l2 # Distance metric for HNSW (l2, cosine)
  dim: 1536 # Embedding dimension (must match embedding model)
  m: 50 # Number of connections per layer in HNSW
  ef: 200 # Size of dynamic candidate list in HNSW
  m0: # Number of bi-directional links in HNSW

  # Summary Settings
  Hcluster_size: 39 # Number of clusters for high-level element matching

  # Search Server Settings
  url: '127.0.0.1' # Server URL for search service
  port: 5000 # Server port number
  unbalance_adjust: true # Enable adjustment for unbalanced data
  cross_node: 10 # Number of cross nodes to return
  Enode: 10 # Number of entity nodes to return
  Rnode: 30 # Number of relationship nodes to return
  Hnode: 10 # Number of high-level nodes to return
  HNSW_results: 10 # Number of HNSW search results
  similarity_weight: 1 # Weight for similarity in personalized PageRank
  accuracy_weight: 1 # Weight for accuracy in personalized PageRank
  ppr_alpha: 0.5 # Damping factor for personalized PageRank
  ppr_max_iter: 2 # Maximum iterations for personalized PageRank
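To give a feel for what ppr_alpha and ppr_max_iter control, here is a minimal sketch of textbook personalized PageRank by power iteration. It is not NodeRAG's implementation: the function name, the dict-based graph format, and the convention that alpha is the probability of following an edge (with 1 - alpha returning to the seed nodes) are all assumptions made for illustration, and similarity_weight and accuracy_weight are not modeled.

```python
def personalized_pagerank(adjacency, personalization, alpha=0.5, max_iter=2):
    """Generic personalized PageRank via power iteration (illustrative only).

    adjacency:       dict mapping each node to a list of its neighbors;
                     every neighbor must also appear as a key
    personalization: dict mapping seed nodes to non-negative weights
    alpha:           assumed here to be the probability of following an edge
    max_iter:        number of propagation steps
    """
    total = sum(personalization.get(n, 0.0) for n in adjacency)
    if total <= 0:
        raise ValueError("personalization must put weight on at least one node")
    # Normalized seed distribution over the nodes of the graph.
    seed = {n: personalization.get(n, 0.0) / total for n in adjacency}

    scores = dict(seed)
    for _ in range(max_iter):
        # With probability (1 - alpha) the walk restarts at a seed node.
        nxt = {n: (1 - alpha) * seed[n] for n in adjacency}
        for node, neighbors in adjacency.items():
            if not neighbors:
                # Dangling node: hand its mass back to the seed distribution.
                for n in adjacency:
                    nxt[n] += alpha * scores[node] * seed[n]
                continue
            share = alpha * scores[node] / len(neighbors)
            for nb in neighbors:
                nxt[nb] += share
        scores = nxt
    return scores


# A four-node chain a - b - c - d, seeded at "a".
chain = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
scores = personalized_pagerank(chain, {"a": 1.0}, alpha=0.5, max_iter=2)
```

In this sketch, two iterations let score travel at most two hops from the seed, so node "d" (three hops away) still scores zero. Raising max_iter spreads relevance further through the graph, and a larger alpha keeps less of the score on the seeds.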

Configuration

Service Provider

We currently support model service providers such as openai and gemini. For embedding models, we support openai_embedding and gemini embedding. We strongly recommend using openai as the model provider, because OpenAI's structured decoding reliably follows the structured decomposition prompts used to generate heterogeneous graphs, which makes the build far more stable. Unfortunately, current Gemini models (even the latest Gemini 2.0) and other models (e.g., DeepSeek) have shown instability in structured outputs. We discussed this issue in this blog.

Available Models

Once you choose a service provider, you can use any model it currently offers.

For example, if you choose openai as the service provider, you can use any model listed under the latest models on their website.

Here are some examples of available models for openai:

- gpt-4o-mini

- gpt-4

- gpt-4o

For embedding models, openai_embedding offers:

- text-embedding-3-small

- text-embedding-3-large

Please refer to the OpenAI Embeddings documentation for the most up-to-date list of available models.

Rate Limit

OpenAI accounts are subject to rate limits, including restrictions on the number of requests and tokens per minute. You can adjust the request rates for both the embedding model and the language model to control usage spikes by setting appropriate rate limits.

For more information on account tiers and specific rate limits, see the OpenAI Rate Limits Guide.
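As an illustration of what a rate_limit value means in practice, the sketch below spaces requests at least 1 / rate_limit seconds apart. It assumes the value is read as requests per second, as the comment in the generated config says. The RequestThrottle class is a hypothetical helper, not part of NodeRAG, whose internal limiter may work differently.

```python
import time


class RequestThrottle:
    """Space out calls so consecutive requests start >= 1/rate_limit s apart."""

    def __init__(self, rate_limit, clock=time.monotonic, sleep=time.sleep):
        if rate_limit <= 0:
            raise ValueError("rate_limit must be positive")
        self.min_interval = 1.0 / rate_limit
        self._clock = clock          # injectable for testing
        self._sleep = sleep          # injectable for testing
        self._next_allowed = None    # earliest start time of the next request

    def wait(self):
        """Block until the next request may start; return the seconds slept."""
        now = self._clock()
        if self._next_allowed is None or now >= self._next_allowed:
            delay = 0.0
            start = now
        else:
            delay = self._next_allowed - now
            self._sleep(delay)
            start = self._next_allowed
        self._next_allowed = start + self.min_interval
        return delay
```

Calling `throttle.wait()` before each API request with `RequestThrottle(20)` would hold the embedding calls to roughly 20 per second. If your account tier returns rate-limit errors, lowering rate_limit in the config widens that spacing.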

Language

Currently, English and Chinese are supported. To add support for additional languages, modify the prompt-related text and update the language return field in promanager accordingly. See Prompt Fine-Tuning for more details.

Embedding Dimension

dim is the number of dimensions in the embedding vector. Make sure this value matches the embedding model you are using. For example, OpenAI's text-embedding-3-small produces 1536-dimensional vectors (text-embedding-3-large defaults to 3072), while Gemini's embedding dimension is 768.
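A mismatch between dim and the embedding model is easy to catch before building the index. The following is a minimal sketch: KNOWN_DIMS and check_dim are hypothetical names, not part of NodeRAG, and the table lists only the default output sizes of the two OpenAI models mentioned above.

```python
from typing import Optional

# Default output dimensions of the OpenAI embedding models named in this guide.
KNOWN_DIMS = {
    "text-embedding-3-small": 1536,
    "text-embedding-3-large": 3072,
}


def check_dim(embedding_model_name: str, dim: int) -> Optional[bool]:
    """Compare a configured `dim` with the model's known default size.

    Returns True on a match, False on a mismatch, and None when the model
    is not in KNOWN_DIMS (nothing to compare against).
    """
    expected = KNOWN_DIMS.get(embedding_model_name)
    if expected is None:
        return None
    return expected == dim


# The defaults from the generated config pass the check.
ok = check_dim("text-embedding-3-small", 1536)
```

Switching embedding_model_name to text-embedding-3-large while leaving dim at 1536 would make the check return False, which is the situation this section warns about.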
