NodeRAG Reproduce

🔬 We provide a complete reproduction repository that contains our implementations of NaiveRAG, HYDE, and NodeRAG, along with snapshot versions and parameter settings of LightRAG and GraphRAG used in our experiments.

📊 Most of the benchmarks used in the experiments have been processed. You can find processing details and our rationale in the benchmark section, as well as guidance on how to quickly obtain usable data.

🔗 Our reproduction repository is available at NodeRAG_reproduce.

1 - Benchmarks

Explore the benchmarks used in NodeRAG experiments

This section details the benchmark datasets and how they were processed.

Benchmarks

We observe that many current benchmarks no longer align with modern RAG settings. Traditional RAG benchmarks work with paragraphs: relevant paragraphs are selected from a limited candidate set before an LLM generates an answer. Current RAG settings more closely resemble real-world scenarios, in which a raw corpus is processed directly for retrieval and answering. We therefore modified existing multi-hop datasets by merging all paragraphs into a single corpus and evaluating only the final answers. Because RAG evaluation is primarily about the quality of the retrieval system, we kept the question-answering settings consistent across all evaluations to ensure fair comparisons.

raw corpus

You should merge all corpora into a single corpus, then use the indexing functionality of each RAG system to index it into their respective databases.
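As a minimal sketch of the merging step, assuming a dataset exposes its paragraphs as a list of strings: the `merge_paragraphs` helper, the blank-line separator, and the removal of exact duplicates are illustrative choices, not part of the reproduction repository.

```python
from pathlib import Path


def merge_paragraphs(paragraphs, output_path):
    """Merge paragraphs into one corpus file (hypothetical helper).

    Empty entries and exact duplicates are dropped, which is an assumption
    made here because multi-hop datasets often repeat the same paragraph
    across questions.
    """
    seen = set()
    unique = []
    for text in paragraphs:
        text = text.strip()
        if text and text not in seen:
            seen.add(text)
            unique.append(text)

    output_path = Path(output_path)
    output_path.parent.mkdir(parents=True, exist_ok=True)
    # Separate paragraphs with a blank line so chunkers can still see boundaries.
    output_path.write_text("\n\n".join(unique), encoding="utf-8")
    return output_path
```

The resulting file can then be handed to the indexing command of each RAG system.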

answer and evaluation

Save your questions and their answer keys in a parquet file. You can then use our provided “LLM as judge” script directly for testing.
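A test set of this kind could be written roughly as follows. This is only a sketch: the column names `question` and `answer` are assumptions, so check the processed parquet files or the evaluation scripts for the names they actually expect. Writing parquet requires pandas with a parquet engine such as pyarrow.

```python
def build_records(questions, answers):
    """Pair each question with its answer key.

    The column names "question" and "answer" are assumptions made for
    illustration, not names confirmed by the repository.
    """
    if len(questions) != len(answers):
        raise ValueError("questions and answers must have the same length")
    return [{"question": q, "answer": a} for q, a in zip(questions, answers)]


def save_parquet(records, path):
    """Write the records to a parquet file (needs pandas and pyarrow)."""
    import pandas as pd  # imported lazily so build_records works without pandas

    pd.DataFrame(records).to_parquet(path, index=False)
```

For example, calling `save_parquet(build_records(questions, answers), "test_set.parquet")` would produce a file that can be passed to the `-q` option of the evaluation commands.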

benchmarks

We provide most of the datasets we used, which have been processed into an easy-to-use format. However, due to copyright requirements for some datasets, please contact any of the authors to obtain our processed datasets and evaluation parquet files.

1.1 - RAG-QA-ARENA

RAG-QA-ARENA is a preference-based comparison dataset, for which we provide a detailed tutorial.

Request for data

You can obtain the dataset by emailing any of the authors.

Process

You will find a RAG Arena folder in Google Drive. Place the data files from this folder into the rag-qa-arena folder in your local copy of the reproduction repository.

Index and Answer

Add the -a flag to the original command to skip evaluation and obtain the raw parquet file.

For example,

python -m eval.eval_node -f path/to/main_folder -q path/to/question_parquet -a

Use change.ipynb in the rag-qa-arena folder to convert the parquet to the evaluation JSON format. Place the processed JSON files in the data/pairwise_eval folder, following this structure:

📁 rag-qa-arena
└── 📁 data
    └── 📁 pairwise_eval
        ├── 📁 GraphRAG
        │   ├── 📄 fiqa.json
        │   ├── 📄 lifestyle.json
        │   ├── 📄 recreation.json
        │   ├── 📄 science.json
        │   ├── 📄 technology.json
        │   └── 📄 writing.json
        ├── 📁 NodeRAG
        │   ├── 📄 fiqa.json
        │   ├── 📄 lifestyle.json
        │   ├── 📄 recreation.json
        │   ├── 📄 science.json
        │   ├── 📄 technology.json
        │   └── 📄 writing.json
        └── 📁 NaiveRAG
            ├── 📄 fiqa.json
            ├── 📄 lifestyle.json
            ├── 📄 recreation.json
            ├── 📄 science.json
            ├── 📄 technology.json
            └── 📄 writing.json
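Before running the pairwise evaluation, it can help to confirm that every expected JSON file is in place. The sketch below assumes the layout shown above; `missing_eval_files` is a hypothetical helper, not a script shipped with the repository.

```python
from pathlib import Path

# The six domain files listed in the folder structure above.
DOMAINS = ["fiqa", "lifestyle", "recreation", "science", "technology", "writing"]


def missing_eval_files(repo_root, systems):
    """Return the expected JSON files that do not exist yet (hypothetical helper)."""
    base = Path(repo_root) / "data" / "pairwise_eval"
    expected = [
        base / system / f"{domain}.json"
        for system in systems
        for domain in DOMAINS
    ]
    return [path for path in expected if not path.is_file()]
```

Calling `missing_eval_files("rag-qa-arena", ["GraphRAG", "NodeRAG", "NaiveRAG"])` returns an empty list once all converted files have been copied over.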

Compare with LFRQA directly.

Modify the script by adding your openai_key.

For macOS and Linux,

bash run_pairwise_eval_lfrqa.sh

For Windows,

run_pairwise_eval_lfrqa.bat

Compare a pair of LLM generations.

Modify the script by adding your openai_key.

For macOS and Linux,

bash run_pairwise_eval_llms.sh

For Windows,

run_pairwise_eval_llm.bat

Modify model1 and model2 so that each model is compared against every other model. For example, compare NaiveRAG against the other four models, then compare HYDE against the remaining three models (excluding NaiveRAG), and so on until all pairwise comparisons are complete.
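The full set of comparisons described here is every unordered pair of models, which can be enumerated with a short sketch. The names in `MODELS` are assumptions and should match the folder names you used under data/pairwise_eval.

```python
from itertools import combinations

# System names as used in this guide; adjust them to your folder names.
MODELS = ["NaiveRAG", "HYDE", "GraphRAG", "LightRAG", "NodeRAG"]


def all_pairs(models):
    """Return every unordered (model1, model2) pair exactly once."""
    return list(combinations(models, 2))


# Print the values to set for model1 and model2 in each evaluation run.
for model1, model2 in all_pairs(MODELS):
    print(f"model1={model1} model2={model2}")
```

With five models this yields ten runs: four for NaiveRAG, three more for HYDE, and so on.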

Complete Pairs

python code/report_results.py --use_complete_pairs

This script reports the win rate and the win+tie rate for all comparisons, and outputs an all_battles.json file.
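To make the two reported numbers concrete, here is a small sketch of how a win rate and a win+tie rate can be computed for one model. The outcome encoding ("win", "tie", "loss") is an assumption for illustration; it is not the schema of all_battles.json and not the code of report_results.py.

```python
def win_rates(outcomes):
    """Compute (win rate, win+tie rate) from per-question outcomes.

    Each outcome is "win", "tie", or "loss" from the point of view of a
    single model. This encoding is a hypothetical one chosen for the sketch.
    """
    total = len(outcomes)
    if total == 0:
        return 0.0, 0.0
    wins = outcomes.count("win")
    ties = outcomes.count("tie")
    return wins / total, (wins + ties) / total
```

For four questions with two wins, one tie, and one loss, the win rate is 2/4 and the win+tie rate is 3/4.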

2 - NaiveRAG

Explore the NaiveRAG baseline implementation

This section details how to reproduce NaiveRAG results.

Index of NaiveRAG

NaiveRAG can use the NodeRAG environment. If you have installed the NodeRAG conda environment, you can directly use NaiveRAG for indexing. If you haven’t installed the NodeRAG environment yet, please refer to the Quick Start guide in the documentation.

You need a folder structure similar to NodeRAG. Create a main working directory called main_folder and place an input folder inside it. Put the files you want to index in the input folder.

main_folder/
├── input/
│   ├── file1.md
│   ├── file2.txt
│   ├── file3.docx
│   └── ...
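The folder layout above can be prepared by hand or with a few lines of Python. The `prepare_main_folder` helper below is a hypothetical convenience, not part of NaiveRAG or NodeRAG.

```python
import shutil
from pathlib import Path


def prepare_main_folder(main_folder, source_files):
    """Create main_folder/input and copy the given files into it."""
    input_dir = Path(main_folder) / "input"
    input_dir.mkdir(parents=True, exist_ok=True)

    copied = []
    for src in source_files:
        dest = input_dir / Path(src).name
        shutil.copy2(src, dest)  # copy2 also preserves file timestamps
        copied.append(dest)
    return copied
```

The same helper applies to any system in this guide that expects a main_folder with an input subfolder.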

Then run

python -m NaiveRAG.build -f path/to/main_folder

Answer and Evaluation

First, prepare your test questions according to the benchmark format. You’ll need to create a test set parquet file containing questions and their corresponding answer keys. Once ready, you can run the evaluation with:

python -m eval.eval_naive -f path/to/main_folder -q path/to/question_parquet

3 - HYDE

Explore the HYDE implementation

This section details how to reproduce HYDE results.

Index of HyDE

HYDE can use the same indexing base as NaiveRAG. Therefore, after completing the NaiveRAG indexing process, you can directly use its index base.

Answer and Evaluation

First, prepare your test questions according to the benchmark format. You’ll need to create a test set parquet file containing questions and their corresponding answer keys. Once ready, you can run the evaluation with:

python -m eval.eval_hyde -f path/to/naive_main_folder -q path/to/question_parquet

4 - GraphRAG

Explore the GraphRAG implementation

This section details how to reproduce GraphRAG results.

Index of GraphRAG

To ensure experimental consistency and command availability, please follow these instructions to install GraphRAG:

conda create -n graphrag python=3.9

conda activate graphrag

pip install graphrag==1.2.0

graphrag init --root path/to/main_folder

This will create two files in the main_folder directory: .env and settings.yaml.

More details about GraphRAG configuration and usage can be found in the official documentation.

Then index by running

graphrag index --root path/to/main_folder

Answer and Evaluation

First, prepare your test questions according to the benchmark format. You’ll need to create a test set parquet file containing questions and their corresponding answer keys. Once ready, you can run the evaluation with:

python -m eval.eval_graph -f path/to/main_folder -q path/to/question_parquet

5 - LightRAG

Explore the LightRAG implementation

This section details how to reproduce LightRAG results.

Index of LightRAG

The LightRAG code in the repository is a snapshot from our experiments, with parameters, functions, and prompts adjusted to return statistical data and to use the unified prompts. To get started, first create a new environment and install the LightRAG dependencies.

conda create -n lightrag python=3.10

conda activate lightrag

cd LightRAG

pip install -e .

Similar to other RAG implementations, you need to create a main working directory called main_folder and place an input folder inside it to store your corpus files.

main_folder/
├── input/
│   ├── file1.md
│   ├── file2.txt
│   ├── file3.docx
│   └── ...

Then run

python -m Light_index -f path/to/main_folder

Answer and Evaluation

First, prepare your test questions according to the benchmark format. You’ll need to create a test set parquet file containing questions and their corresponding answer keys. Once ready, you can run the evaluation with:

python -m eval.eval_light -f path/to/main_folder -q path/to/question_parquet

6 - NodeRAG

Explore the NodeRAG implementation

This section details how to reproduce NodeRAG results.

Indexing of NodeRAG

python -m NodeRAG.build -f path/to/main_folder

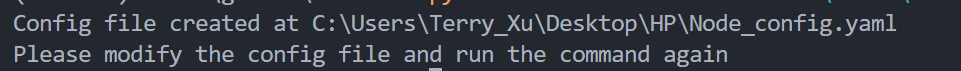

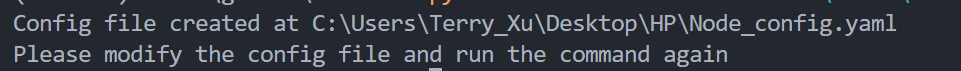

The first time you run this command, it creates a Node_config.yaml file in the main_folder directory.

Modify the config file according to the following instructions (add API and service provider) to ensure that NodeRAG can access the correct API.

To quickly use the NodeRAG demo, set the API key for your OpenAI account. If you don’t have an API key, refer to the OpenAI Auth. Ensure you enter the API key in both the model_config and embedding_config sections.

For detailed configuration and modification instructions, see the Configuration Guide.

#==============================================================================
# AI Model Configuration
#==============================================================================
model_config:
  model_name: gpt-4o-mini    # Model name for text generation
  api_keys:                  # Your API key (optional)

embedding_config:
  api_keys:                  # Your API key (optional)

Building

After setting up the config, rerun the following command:

python -m NodeRAG.build -f path/to/main_folder

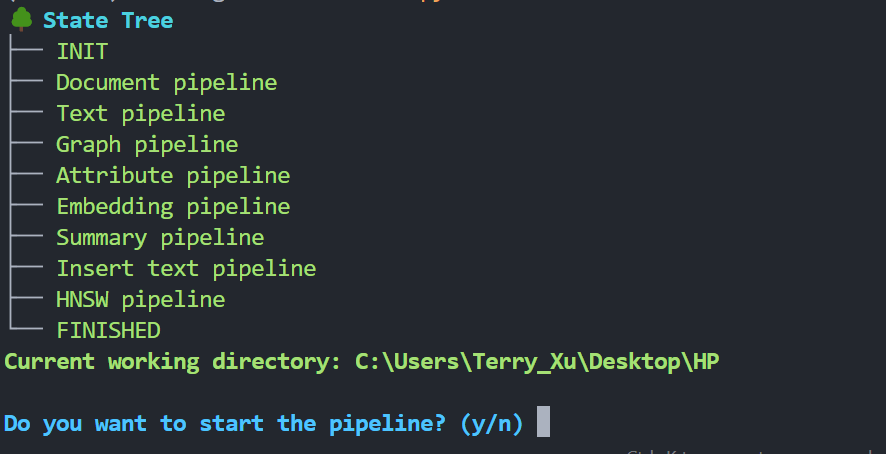

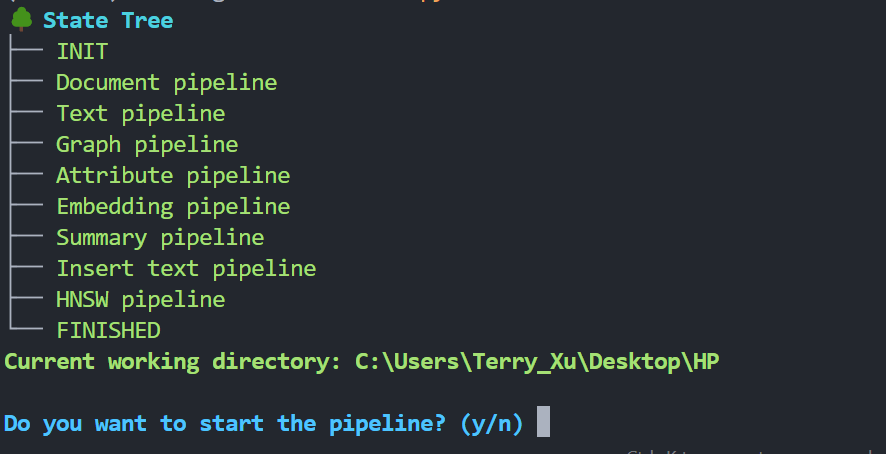

The terminal will display the state tree:

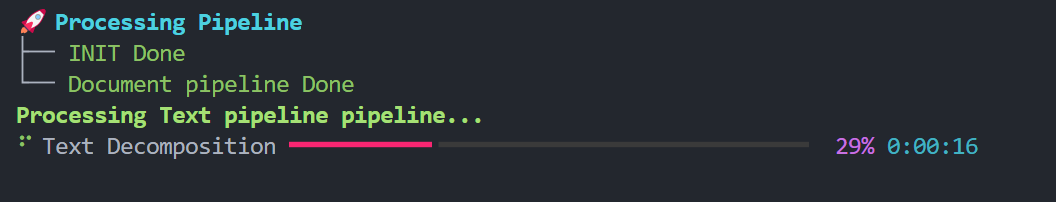

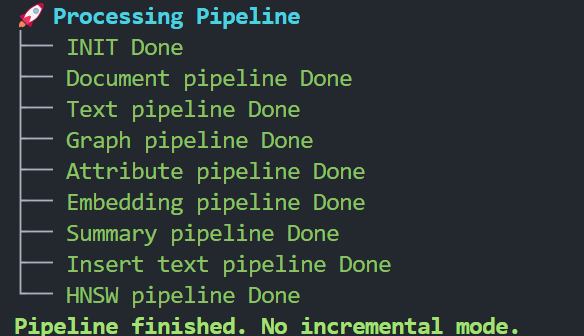

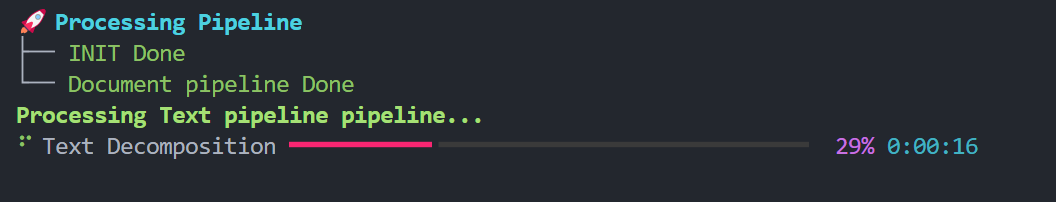

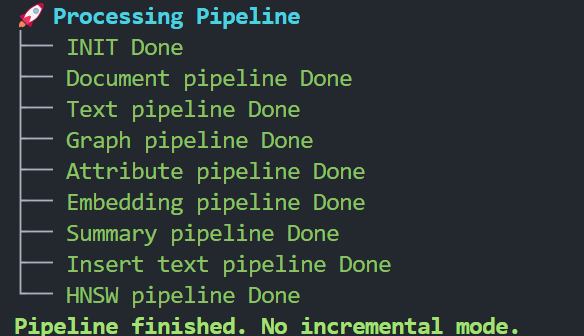

Press y to continue. Wait for the workflow to complete.

For more detailed information about NodeRAG configuration, indexing, and usage, please refer to our documentation.

Answer and Evaluation

First, prepare your test questions according to the benchmark format. You’ll need to create a test set parquet file containing questions and their corresponding answer keys. Once ready, you can run the evaluation with:

python -m eval.eval_node -f path/to/main_folder -q path/to/question_parquet